We are hearing a lot about AI and elections, should we be concerned?

Elections are a fundamental part of democracy. However, we can’t afford to miss the impacts of AI across the practices of democracy, especially in what’s been described as a “bumper election year” around the globe. Alongside polls, parties and politics, we need to understand neurotechnology, knowledge access, the data economy and corporate tech governance.

The latest call to curb harm from AI mis- and disinformation came from UN Secretary-General António Guterres. Trackers are identifying noteworthy instances of AI generally, and generative AI specifically, as they go head to head with political campaigns and elections. In the United States, an AI voice clone of President Joe Biden targeted New Hampshire constituents, telling them not to vote. Countless examples of AI-generated images and text of political candidates have appeared, including deepfakes of Donald Trump and Narendra Modi, as well as “softfakes” generated to improve the appeal of candidates such as Prabowo Subianto and Imran Khan.

Australia’s Home Affairs Minister Clare O’Neil warned last month that “technology is weakening democratic fundamentals such as free and fair elections and open political debate”. Populations worldwide are worried, with Australians among the most concerned.

The latest Digital News Report shows that concern in Australia about misinformation increased to 75% this year, up from 64% in 2022. The Ipsos AI monitor shows 52% of Australians think AI will make disinformation on the internet worse. A 2024 Adobe study, called Future of Trust, found that 78% of Australian respondents think misinformation and deepfakes will impact elections.

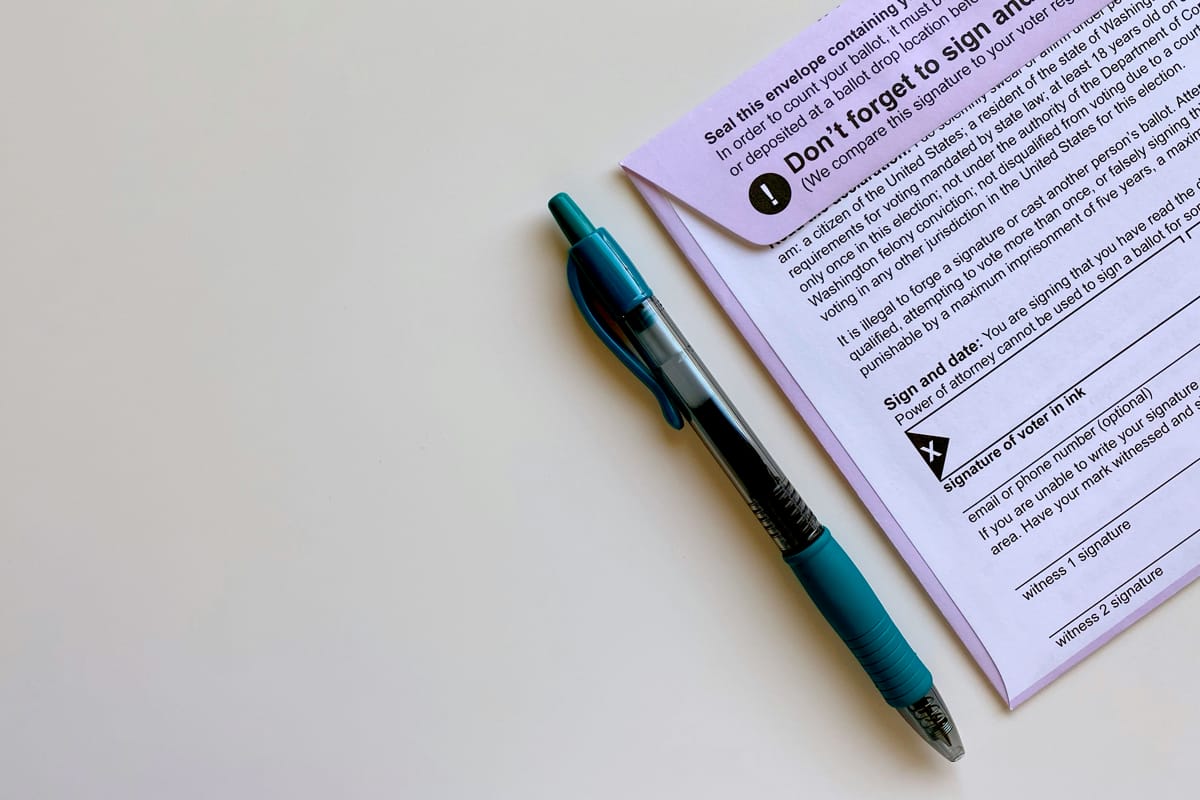

Australian electoral law presently offers only limited protections to combat deepfakes, disinformation and AI threats. This has led to increasing calls to ban AI-generated election materials. Electoral Commissioner Tom Rogers noted that the Australian Electoral Commission (AEC) “does not possess the legislative tools, or internal technical capability, to deter, detect or adequately deal with false AI generated content concerning the election process”. The AEC was not set up to establish truth in politics – who could? – but to maintain an impartial and independent electoral system.

Rogers also noted a decline over the past 18 months in the AEC's previously very good relationship with social media companies. This aligns with an overall deterioration in the resourcing and responsiveness those companies bring to serious challenges. Election integrity and enforcement priorities are in flux at some of the biggest social media platforms. Some platform policy changes have systematically amplified authoritarian state propaganda and fake news.

Companies no longer appear to be acting together to disrupt foreign influence operations on their platforms. There seems to be an approach that equates content moderation with censorship. Recent assessments of influence operations on Meta and OpenAI are a good start but require transparency.

The silencing of voices from public space is a primary concern. Women, LGBTQI and Indigenous people experience online hate speech at more than double the national average in Australia. Such experience, especially for women, is global.

AI threats to democratic processes and institutions are emerging across elections in campaigns, information and infrastructure. Evidence of AI impacts on specific election results is limited, which is unsurprising given how recently the technology has been widely adopted. It is important not to unnecessarily hype this issue or suggest foreign interference when there isn’t evidence. But ensuring election integrity is not just about making sure voters have access to verified, truthful information, even though this is critical.

Australian democratic processes and bureaucratic institutions are based on four key ideas: active and engaged citizens, an inclusive and equitable society, free and franchised elections, and the rule of law for all. Australia not only has compulsory voting, but we also enforce it – exceptional in the current context.

However, rather than focusing only on elections, it is vital to improve the resilience and integrity of the information environment across democratic processes. The world is on the cusp of further technological and social changes, and ensuring the supporting settings reflect our values and interests is critical.

Two emerging threats should be top of mind: changes in the way we access and assess knowledge, and the expansion of consumer neurotechnology applications.

Many AI tools – and especially voice assistants – deliver outputs that remove the context that helps us to assess the accuracy, source and integrity of information. This is changing the way people interact with information and create knowledge. Understanding the inputs and processes of AI, its potential for algorithmic influence and interference, and who shapes knowledge production is vital to mitigating its disastrous potential.

Tech companies developing foundational technologies such as AI are pushing the boundaries on acceptable and appropriate corporate governance. The choices of these companies and their processes, procedures and practices shape the information environment. But these companies are unelected and largely opaque. Systemic changes to big tech business models will take time, but all signs suggest they are impending. This will help get the digital backbone of our society right.

The concern around AI also misses the role of human agency. Tighter restrictions on political content would help – but the impact of that content depends critically on how the message is distributed and whom it targets. Watermarking genuine or AI-generated content is one useful provenance measure, but it is only one of many needed.

Having an election doesn’t guarantee a democracy, but you cannot have a democracy without an election. AI and emerging technologies are causing friction for democratic institutions and processes. In the face of this friction, we need to steady our institutions, forums and processes. Hopefully the focus on and interest in AI in elections will translate into legislative, policy and industry changes to strengthen the integrity of our institutions and information environment.