One week in, the information war that has accompanied, and to an extent framed, the military invasion of Ukraine has not gone as Vladimir Putin may have hoped.

But it still might.

So far, the use of social media during, and as part of, the conflict has been encouraging for those in Ukraine, in Russia and elsewhere in the world who oppose the invasion.

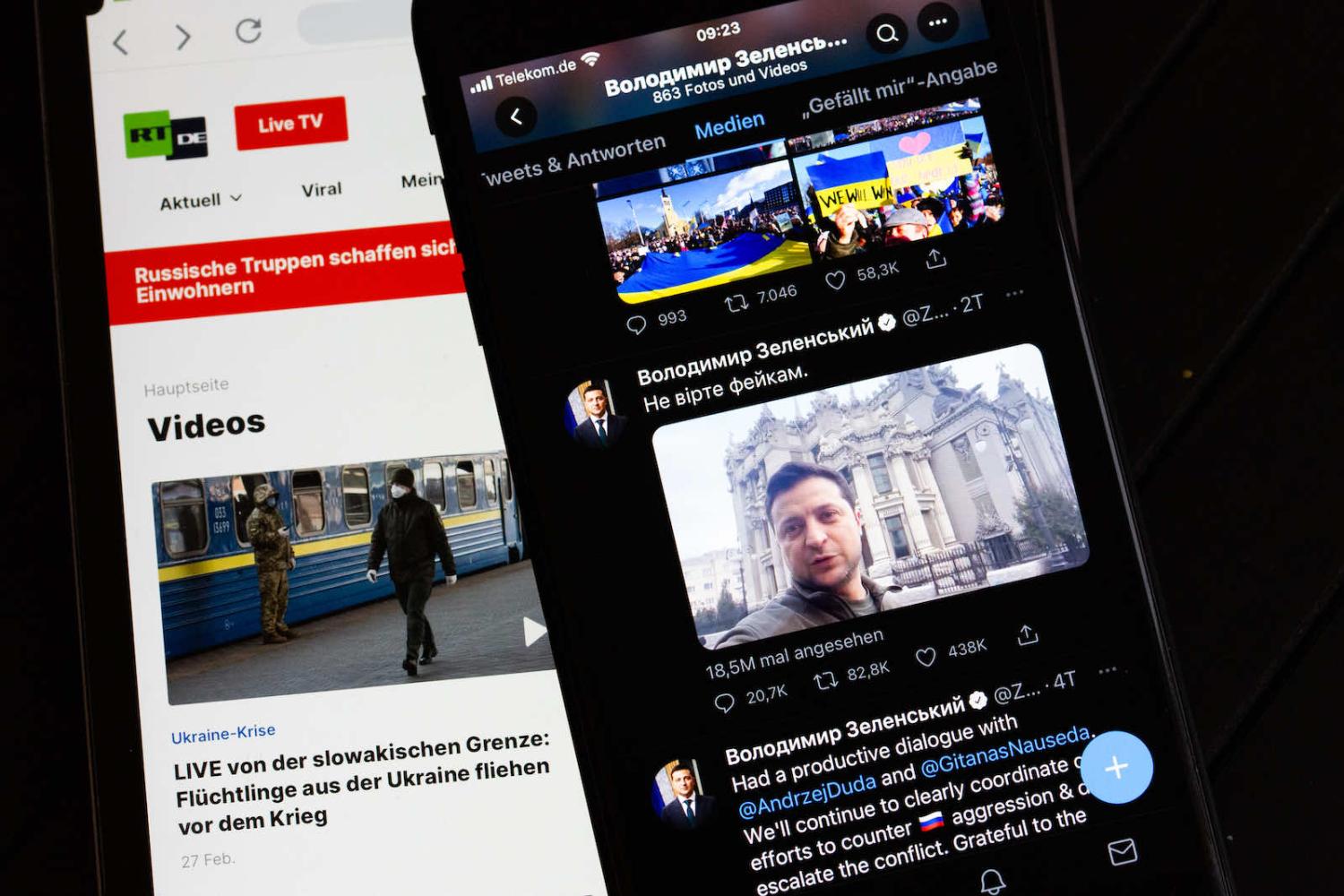

Ukrainian President Volodymyr Zelensky, a former actor and comedian with communications savvy, posted defiant and inspiring videos from besieged Kyiv using an ordinary smartphone. Ukrainian officials have posted videos onto the Telegram channel Find Your Own, showing captured Russian soldiers – leading to outcry from their families, unaware their sons and brothers were a part of the invading force.

Ukrainian cyber professionals are being recruited and organised via encrypted apps to assist with cyber defence and countering disinformation. Social media influencers have switched from posting lifestyle advice or dream travel destinations to tutorials about making Molotov cocktails. Meanwhile, international hacker communities such as Anonymous have claimed to have taken down or taken over several Russian and Belarusian state websites, including those of the Kremlin and the state broadcasters, and to have stolen and published sensitive Russian defence data.

Social media platforms, under pressure after carrying and profiting from Russian disinformation, have also made some efforts by banning, labelling or demonetising Russian state media channels.

The online community of amateur investigators, including organisations such as Bellingcat and the Centre for Information Resilience, have tracked movements of Russian forces and sought to debunk Russian propaganda. This can be done by using the metadata (such as geolocation data and timestamps) of online images and videos, as well as by employing specialist knowledge about uniforms, weapons systems, buildings and landscapes, or using reverse image and audio searches to track down the original sources, which can turn out to be years old and from a different country. Images from increasingly powerful and accessible commercial satellites and even from Google Earth and Google Maps are combined with footage posted on social media from security cameras and smartphones.

The upshot is that discredited attempts at disinformation have been ridiculed and turned into memes by opponents of the war, who can re-mix, re-edit and re-distribute their parodies.

It wasn’t really supposed to turn out like this.

Based on Russian successes in the past, including previously in Ukraine but also influence operations in the United States, the United Kingdom and elsewhere, there was a reasonable assumption that, in both cyberwarfare and information operations, Russia had the capability to critically harm infrastructure, utilities and public opinion.

The damage, so far, seems limited – and it’s left some experts bemused and seeking explanations.

Reasons offered include Russian miscalculation, underestimation of Ukrainian resolve and international solidarity, and the lessons learned – by governments and online networked movements and activists – about how to conduct information warfare. American author Peter Singer lists several reasons for the success of the anti-Putin information campaigns, including the use of powerful images of heroism, defiance and mockery. This also followed the decision by Ukrainian, European and US agencies to widely disseminate information about Russian military movements and fact-check Russian disinformation in the lead-up to the invasion.

This kind of tactical communication, where the lie is exposed before it has a chance to spread, is known as pre-bunking and draws on what is called “inoculation theory”.

If successful, it provides a template for future international information warfare defensive operations.

If.

There are reasons for initial optimism to be tempered.

One is the mess, not only the mass, of information. Alongside those speaking truth to power are those seeking attention and building online profiles by hitching their wagon to the cause with no regard to the veracity of their content. Thomas Rid, author of Active Measures, for example, explains how wading through the clutter takes expertise and time. Most people have neither, adding to the sense of confusion.

The rapid proliferation of information of varying quality suits the Russian model for information warfare, described by the RAND Corporation as a “firehose of falsehood”, which aims to confuse, not to convince.

Moreover, there are many constituencies in this information war – Russian, Ukrainian, global – and they matter differently. Putin’s position depends on few of them. The audiences that matter, the ones that will shape the conflict, may be few, such as elements of the Russian elite.

So winning the information war may be important, but not sufficient, for military success.

As American tech observer Casey Newton shrewdly notes:

Control of the narrative and control of geography are two distinct things. If Russia ultimately takes over Ukraine, this war will have also revealed the limits of what internet organising can do to stop a global superpower.