Kamala Harris calls herself a “diversity hire” and refers to Joe Biden as senile. It’s an extraordinary statement, caught on video and widely circulated online.

Except it’s not real.

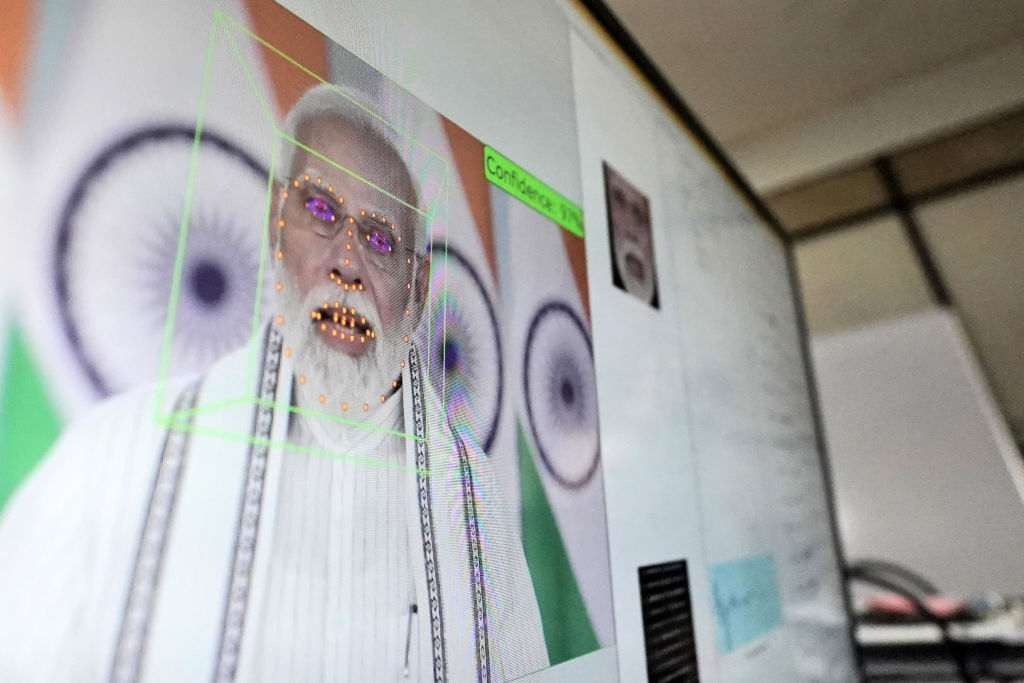

Deepfakes are hyper-realistic videos or audio, created using artificial intelligence, of people saying and doing things they never said or did. They have emerged as the latest tool for electoral manipulation. Early versions were comically fake. Newer content, powered by widely available tools including Dall-E, Meta AI and X’s AI image generator Grok-2, is alarmingly realistic.

In this bumper election year, with more than half of the world’s population going to the polls, AI-generated content has sent political disinformation and concern over electoral legitimacy into hyperdrive. If the events in elections worldwide are a preview of what we can expect in Australia, are we ready?

AI-generated deepfakes are a step change in electoral manipulation. Slovakia was shocked by deepfake audio of an alleged conversation between Progressive Slovakia leader Michal Simecka and a local journalist discussing vote buying, released just hours prior to its election. In Bangladesh, a deepfake video of independent candidate Abdullah Nahid Nigar claiming to withdraw from the campaign spread rapidly across social media on election day.

Among the many sounding the alarm, researchers at George Washington University have warned of daily “AI attacks” posing a threat to the US general election. Some experts describe this as a weaponisation of AI to deceive voters, in which misleading or fabricated content is created, seeded and amplified using generative AI, bots and fake social media accounts. The result is that people find it harder to discern fact from fiction, public opinion is manipulated, and democratic processes are disrupted by discouraging voter turnout or shifting votes away from popular candidates.

Russia has a long history of using disinformation to project power and influence abroad and is widely considered one of the more aggressive and adept exploiters of the new technological landscape. US intelligence agencies assess that China and Iran are growing in expertise and willingness, following Russia’s covert online influence playbook.

Australia has experienced deepfakes since 2020, including the simulated voices of politicians such as Foreign Minister Penny Wong and Finance Minister Katy Gallagher used to promote a range of investment scams. In the last few months, there have been deepfake political videos and audio aplenty in the run-up to the Queensland state election, created and published not by foreign governments but by major parties mocking their political opponents.

The Australian Electoral Commission has been sounding the alarm for months. It has warned that deepfakes and AI-generated disinformation will plague Australia’s next national election. That’s because it’s not illegal to make a deepfake, and the AEC has neither the legislative tools nor the internal technical capability to do anything about it.

In the United States, California has taken action by regulating the use of deepfakes and other AI content in elections. But a federal judge has blocked the law, citing a potential violation of America’s First Amendment, which protects freedom of speech. Enforcement of the new law now sits in limbo.

In Australia, despite acknowledging the need for guardrails in AI systems used in an electoral or political setting, the interim report of the new Select Committee on Adopting Artificial Intelligence refused to mandate watermarking and credentialing of AI content ahead of the next election. As long as the requirements remain voluntary, they will likely be ignored by those purposely seeking to undermine the electoral system. At this rate, Australia may not see mandated AI controls in an election until 2028.

Most counter-disinformation approaches are long plays: media literacy education, legislation, supporting local journalism, fact-checking, and the difficult work of removing inauthentic asset networks. But a response is needed now.

There are two options Australia can implement to minimise the harm caused by deepfakes during the next election.

First, a program empowering voters to report suspected deepfakes. A deepfake detection program should not be led by the AEC, as that would risk harming the Commission’s independence and impartiality. Instead, an independent organisation working alongside any of the multitude of companies developing deepfake detection models could take the lead. Citizens would submit suspicious content not to verify its claims, but to check the probability of it being AI-generated, regardless of what it says. Empowering voters to take control of their media consumption is a proven approach to countering disinformation.

The second option requires political party will: a transparency commitment. Political parties have already expressed concern about deepfakes in elections, so it should not be a stretch for them to commit to refraining from posting AI-generated content in their campaigns. A public pledge not to use deepfakes or AI-generated materials fosters trust with voters and upholds the integrity of political discourse. It signals to the electorate that the party prioritises authenticity and accountability over sensationalism.

Together these initiatives can create a stronger defence against the rising tide of disinformation by cultivating an informed electorate that is better equipped to recognise deception.

Deepfakes are intended to be convincing. As electoral manipulation tactics become more sophisticated, it’s harder than ever to distinguish fact from fiction. As a result, voter anxiety about AI-generated disinformation is real. As Australia gears up for its next national election, events around the world serve as cautionary tales and underscore the need for proactive measures against deepfakes and AI-generated disinformation. Practical steps can be taken to safeguard democracy – we don’t need to wait until 2028 to fortify our electoral integrity.